CV | Google Scholar | GitHub | X | Email

Howdy! Welcome to my home page. 👋 I am Tiansheng Wen, a final-year M.S. student at Xidian University, advised by Prof. Bo Chen. Currently, I am a Research Intern at Stony Brook University, working with Prof. Chenyu You. I also work closely with Prof. Stefanie Jegelka and Yifei Wang on developing efficient and scalable sparse methods. 🔥 I am seeking a PhD position for Fall 2026. Please feel free to reach out to me via email if you believe I am a good fit for your research team. I welcome the opportunity for further discussion! Please see my CV for more details.

Research Interests

My primary research goal is to develop scalable, reliable, and efficient methods for machine learning and generative AI, with a particular focus on sparsity, adaptive representation learning, and principled uncertainty estimation in foundation models, including LLMs, VLMs, and diffusion models. I am also highly interested in:

- 📚 Memorization in large models

- 🔄 Self-consuming/self-improving loops

- 🤖 Agent learning with foundation models

If you share these research interests, feel free to reach out or add me on WeChat.

News

- [10/2025] Joined ByteDance Bandai as a Research Intern, targeting Deep Research! ⚡️⚡️

- [07/2025] The wait is over! CSR is now available in Sentence Transformers v5.0! 🤗🤗

- [05/2025] Our paper CSR has been accepted at ICML 2025 (Oral)! 🎉🎉

- [03/2025] Our recent work, Contrastive Sparse Representation (CSR), has drawn considerable interest as a promising alternative for efficient embedding retrieval, and we have been invited to publish the model on Hugging Face and Sentence Transformers! Code is available at Link. 🤗🤗

- [02/2025] Our paper LanCE has been accepted at CVPR 2025! 🎉🎉

- [07/2024] Our paper HICE-Score has been accepted at ACM MM 2024! 🎉🎉

Publications


Tiansheng Wen*, Yifei Wang*, Zequn Zeng, Zhong Peng, Yudi Su, Xinyang Liu, Bo Chen, Hongwei Liu, Stefanie Jegelka, Chenyu You

ICML 2025

Oral Presentation

abstract | paper | Sentence-Transformer Blog | code

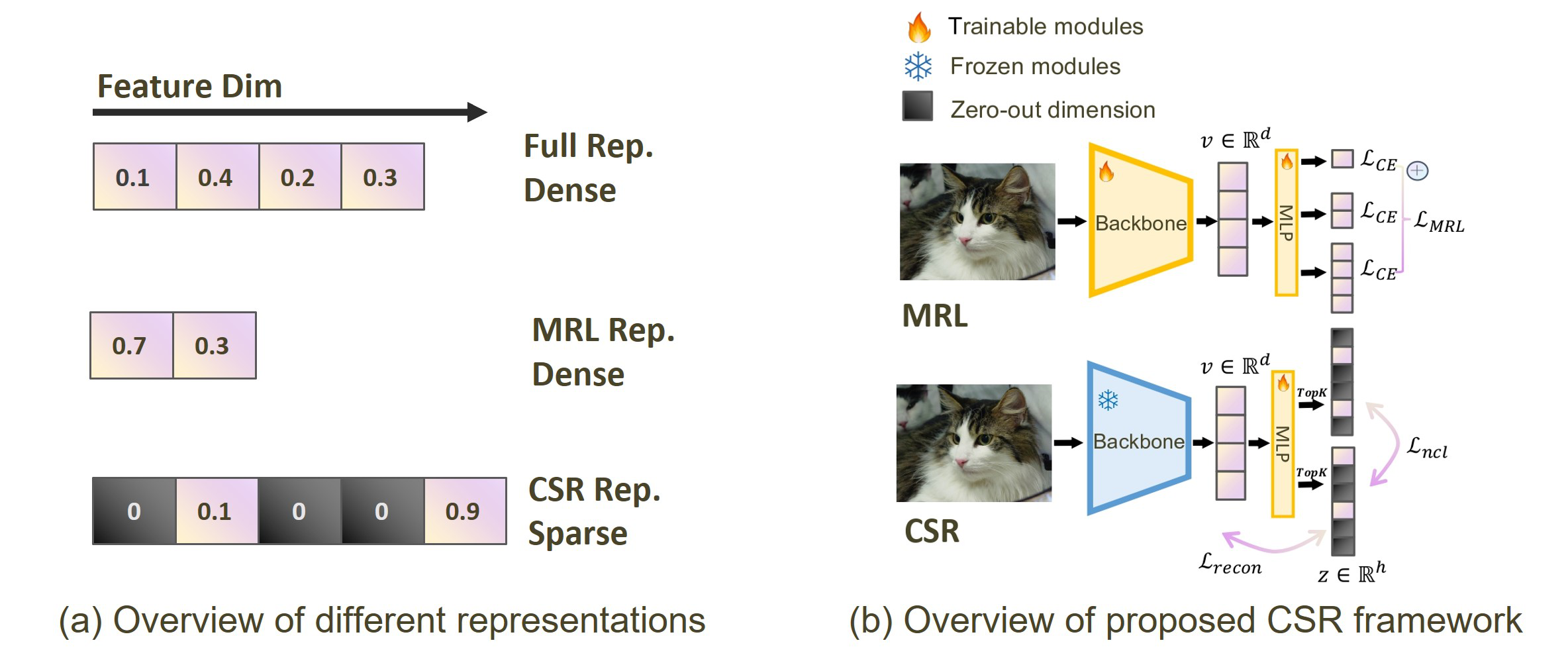

Many large-scale systems rely on high-quality deep representations (embeddings) to facilitate tasks like retrieval, search, and generative modeling. Matryoshka Representation Learning (MRL) recently emerged as a solution for adaptive embedding lengths, but it requires full model retraining and suffers from noticeable performance degradation at short lengths. In this paper, we show that sparse coding offers a compelling alternative for achieving adaptive representation with minimal overhead and higher fidelity. We propose Contrastive Sparse Representation (CSR), a method that sparsifies pre-trained embeddings into a high-dimensional but selectively activated feature space. By leveraging lightweight autoencoding and task-aware contrastive objectives, CSR preserves semantic quality while allowing flexible, cost-effective inference at different sparsity levels. Extensive experiments on image, text, and multimodal benchmarks demonstrate that CSR consistently outperforms MRL in terms of both accuracy and retrieval speed, often by large margins, while also cutting training time to a fraction of that required by MRL. Our results establish sparse coding as a powerful paradigm for adaptive representation learning in real-world applications where efficiency and fidelity are both paramount.


Tiansheng Wen*, Yifei Wang*, Aosong Feng, Long Ma, Xinyang Liu, Yifan Wang, Lixuan Guo, Bo Chen, Stefanie Jegelka, Chenyu You

Preprint 2025

abstract | paper | code

Mixture-of-Experts (MoE) architectures scale large language models (LLMs) by activating only a subset of experts per token, but the standard TopK routing assigns the same fixed number of experts to all tokens, ignoring their varying complexity. Prior adaptive routing methods introduce additional modules and hyperparameters, often requiring costly retraining from scratch. We propose Sequence-level TopK (SeqTopK), a minimal modification that shifts the expert budget from the token level to the sequence level. By selecting the top T × K experts across all T tokens, SeqTopK enables end-to-end learned dynamic allocation (assigning more experts to difficult tokens and fewer to easy ones) while preserving the same overall budget. SeqTopK requires only a few lines of code, adds less than 1% overhead, and remains fully compatible with pretrained MoE models. Experiments across math, coding, law, and writing show consistent improvements over TopK and prior parameter-free adaptive methods, with gains that become substantially larger under higher sparsity (up to 16.9%). These results highlight SeqTopK as a simple, efficient, and scalable routing strategy, particularly well-suited for the extreme sparsity regimes of next-generation LLMs.
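To make the difference between the two budgets concrete, here is a minimal pure-Python sketch, not the released implementation. It assumes the router has already produced a T × E table of scores (`scores[t][e]` for token `t` and expert `e`); the function names `token_topk` and `seq_topk` and the toy numbers are made up for illustration, and details such as score normalization or batching are left out.

```python
from typing import List, Set, Tuple

Selection = Set[Tuple[int, int]]  # set of (token index, expert index) pairs


def token_topk(scores: List[List[float]], k: int) -> Selection:
    """Standard routing: every token keeps its own k highest-scoring experts."""
    selected: Selection = set()
    for t, row in enumerate(scores):
        ranked = sorted(range(len(row)), key=lambda e: row[e], reverse=True)
        selected.update((t, e) for e in ranked[:k])
    return selected


def seq_topk(scores: List[List[float]], k: int) -> Selection:
    """Sequence-level routing: keep the T * k highest scores over all tokens."""
    budget = len(scores) * k  # same total budget as token-level TopK
    pairs = [(t, e) for t, row in enumerate(scores) for e in range(len(row))]
    pairs.sort(key=lambda p: scores[p[0]][p[1]], reverse=True)
    return set(pairs[:budget])


if __name__ == "__main__":
    # Hypothetical router scores for 2 tokens and 4 experts.
    toy_scores = [
        [0.9, 0.8, 0.7, 0.1],  # token 0 has three strong experts
        [0.6, 0.2, 0.1, 0.1],  # token 1 has one strong expert
    ]
    print(sorted(token_topk(toy_scores, k=2)))
    print(sorted(seq_topk(toy_scores, k=2)))
```

In this toy case both selections use four (token, expert) slots, but the sequence-level version gives three of them to token 0 and one to token 1.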


Lixuan Guo*, Yifei Wang*, Tiansheng Wen*, Yifan Wang, Aosong Feng, Bo Chen, Stefanie Jegelka, Chenyu You

Preprint 2025

abstract | paper | code

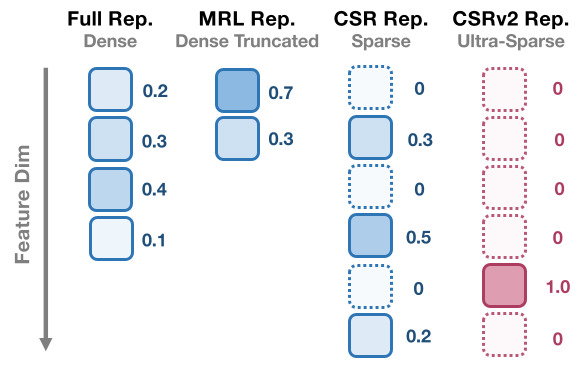

In the era of large foundation models, the quality of embeddings has become a central determinant of downstream task performance and overall system capability. Yet widely used dense embeddings are often extremely high-dimensional (e.g., 4096), incurring substantial costs in storage, memory, and inference latency. To address these costs, Contrastive Sparse Representation (CSR) was recently proposed as a promising direction, mapping dense embeddings into high-dimensional but k-sparse vectors, in contrast to compact dense embeddings such as Matryoshka Representation Learning (MRL). Despite its promise, CSR suffers severe degradation in the ultra-sparse regime (e.g., k ≤ 4), where over 80% of neurons remain inactive, leaving much of its efficiency potential unrealized. In this paper, we introduce CSRv2, a principled training approach designed to make ultra-sparse embeddings viable. CSRv2 stabilizes sparsity learning through progressive k-annealing, enhances representational quality via supervised contrastive objectives, and ensures end-to-end adaptability with full backbone finetuning. CSRv2 reduces dead neurons from 80% to 20% and delivers a 14% accuracy gain at k=2, bringing ultra-sparse embeddings on par with CSR at k=8 and MRL at 32 dimensions, all with only two active features. While maintaining comparable performance, CSRv2 delivers a 7× speedup over MRL and yields up to 300× improvements in compute and memory efficiency relative to dense embeddings. Extensive experiments across text (MTEB, multiple state-of-the-art LLM embeddings (Qwen and e5-Mistral-7B)) and vision (ImageNet-1k) demonstrate that CSRv2 makes ultra-sparse embeddings practical without compromising performance. By making extreme sparsity viable, CSRv2 broadens the design space for large-scale, real-time, and edge-deployable AI systems where both embedding quality and efficiency are critical.
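For readers unfamiliar with k-sparse embeddings, the following pure-Python sketch shows why they are cheap to store and compare. It is only an illustration under simplifying assumptions: the learned encoder and training objectives are omitted entirely, the input is a hypothetical vector of already-computed high-dimensional activations, and the helper names `top_k_sparsify` and `sparse_dot` are invented here.

```python
from typing import Dict, List


def top_k_sparsify(activations: List[float], k: int) -> Dict[int, float]:
    """Keep the k largest positive activations as an {index: value} map."""
    ranked = sorted(
        range(len(activations)), key=lambda i: activations[i], reverse=True
    )
    return {i: activations[i] for i in ranked[:k] if activations[i] > 0.0}


def sparse_dot(a: Dict[int, float], b: Dict[int, float]) -> float:
    """Dot product that only touches indices active in both vectors."""
    if len(a) > len(b):
        a, b = b, a  # iterate over the smaller map
    return sum(value * b[i] for i, value in a.items() if i in b)


if __name__ == "__main__":
    # Hypothetical 6-dimensional activations; real sparse codes are far wider.
    query = top_k_sparsify([0.0, 2.0, 0.1, 1.0, 0.0, 0.3], k=2)
    doc = top_k_sparsify([0.5, 1.5, 0.0, 0.0, 3.0, 0.0], k=2)
    print(query, doc, sparse_dot(query, doc))
```

With k active features per vector, storage and similarity cost scale with k rather than with the full dimensionality, which is the efficiency argument the abstract relies on.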


Zequn Zeng*, Yudi Su*, Jianqiao Sun, Tiansheng Wen, Hao Zhang, Zhengjue Wang, Bo Chen, Hongwei Liu, Jiawei Ma

CVPR 2025

abstract | paper | code

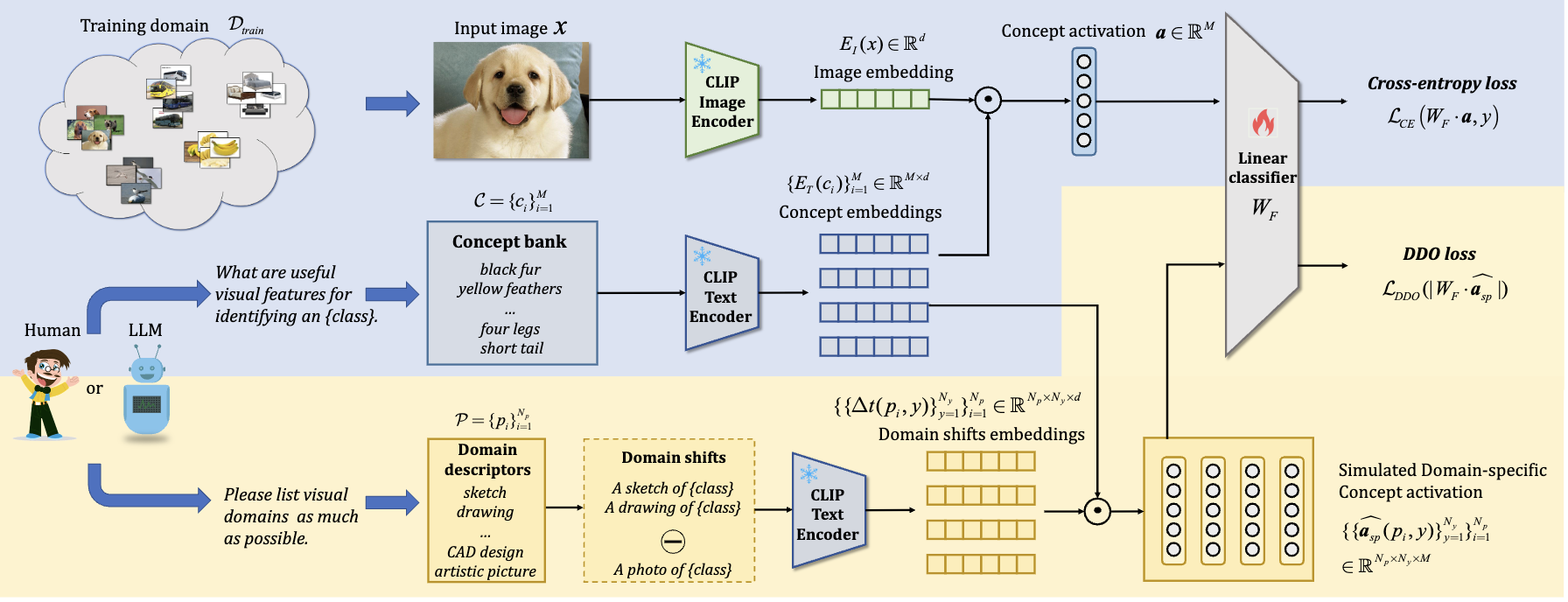

Concept-based models can map black-box representations to human-understandable concepts, which makes the decision-making process more transparent and allows users to understand the reasons behind predictions. However, domain-specific concepts often affect the final predictions, which undermines the model's generalization capabilities and prevents the model from being used in high-stakes applications. In this paper, we propose a novel Language-guided Concept-Erasing (LanCE) framework. In particular, we empirically demonstrate that pre-trained vision-language models (VLMs) can approximate distinct visual domain shifts via domain descriptors, while prompting large language models (LLMs) can easily simulate a wide range of descriptors of unseen visual domains. Then, we introduce a novel plug-in domain descriptor orthogonality (DDO) regularizer to mitigate the impact of these domain-specific concepts on the final predictions. Notably, the DDO regularizer is agnostic to the design of concept-based models, and we integrate it into several prevailing models. Through evaluation of domain generalization on four standard benchmarks and three newly introduced benchmarks, we demonstrate that DDO can significantly improve the out-of-distribution (OOD) generalization over the previous state-of-the-art concept-based model.


Zhibin Duan*, Tiansheng Wen*, Yifei Wang, Chen Zhu, Bo Chen, Mingyuan Zhou

Preprint 2024

abstract | paper

Factor analysis, often regarded as a Bayesian variant of matrix factorization, offers superior capabilities in capturing uncertainty, modeling complex dependencies, and ensuring robustness. As the deep learning era arrives, factor analysis is receiving less and less attention due to its limited expressive ability. In contrast, contrastive learning has emerged as a potent technique with demonstrated efficacy in unsupervised representation learning. While the two methods are different paradigms, recent theoretical analysis has revealed the mathematical equivalence between contrastive learning and matrix factorization, suggesting that factor analysis can be combined with contrastive learning. Motivated by the interconnectedness of contrastive learning, matrix factorization, and factor analysis, this paper introduces a novel Contrastive Factor Analysis framework, aiming to leverage factor analysis's advantageous properties within the realm of contrastive learning. To further leverage the interpretability properties of non-negative factor analysis, which can learn disentangled representations, contrastive factor analysis is extended to a non-negative version. Finally, extensive experimental validation showcases the efficacy of the proposed contrastive (non-negative) factor analysis methodology across multiple key properties, including expressiveness, robustness, interpretability, and accurate uncertainty estimation.


Zequn Zeng, Jianqiao Sun, Hao Zhang, Tiansheng Wen, Yudi Su, Yan Xie, Zhengjue Wang, Bo Chen

ACM MM 2024

abstract | paper | code

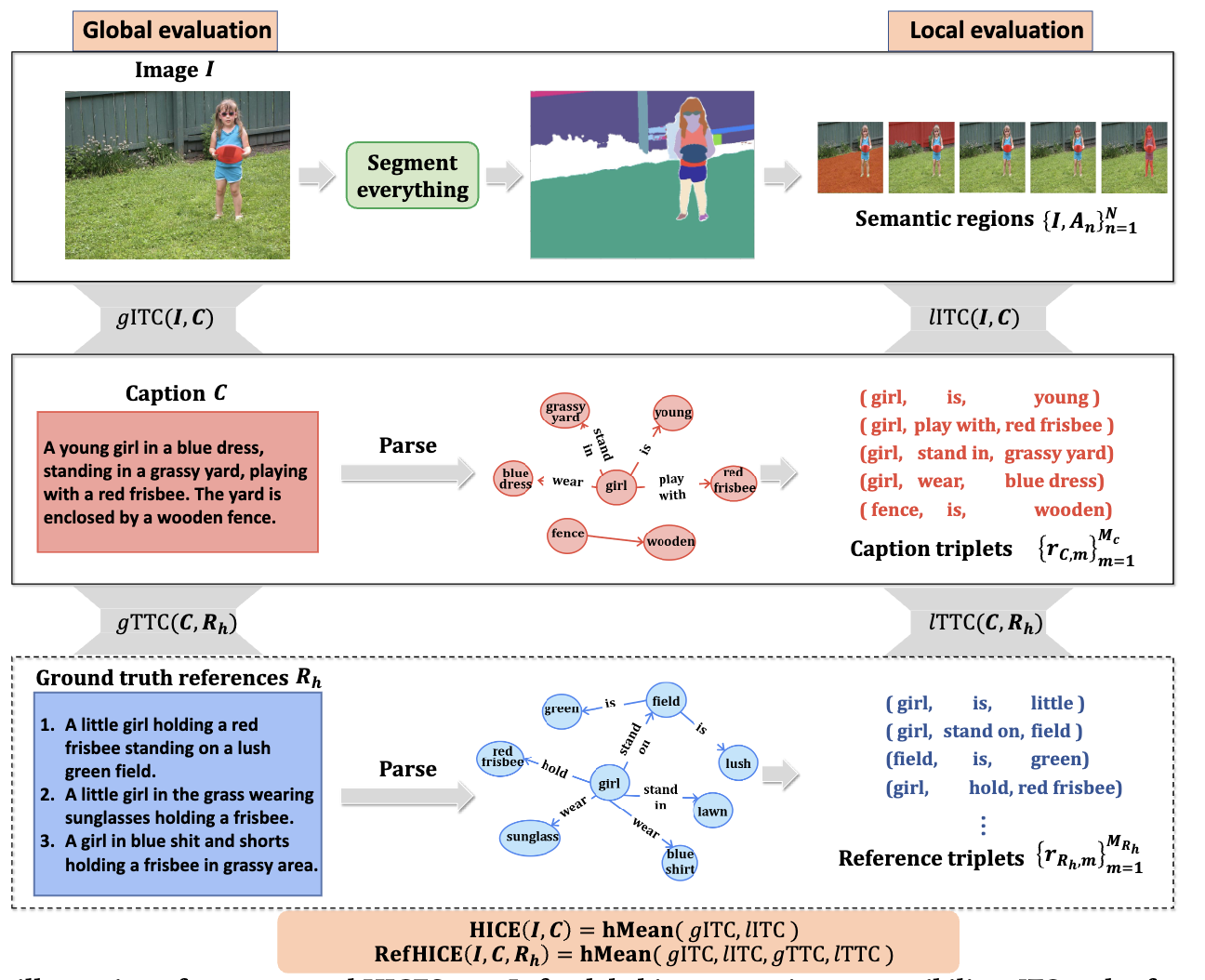

Image captioning evaluation metrics can be divided into two categories: reference-based metrics and reference-free metrics. However, reference-based approaches may struggle to evaluate descriptive captions with abundant visual details produced by advanced multimodal large language models, due to their heavy reliance on limited human-annotated references. In contrast, previous reference-free metrics have been proven effective via CLIP cross-modality similarity. Nonetheless, CLIP-based metrics, constrained by their reliance on global image-text compatibility, often fail to detect local textual hallucinations and are insensitive to small visual objects. Besides, their single-scale designs are unable to provide an interpretable evaluation process, such as pinpointing the position of caption mistakes and identifying visual regions that have not been described. To move forward, we propose a novel reference-free metric for image captioning evaluation, dubbed Hierarchical Image Captioning Evaluation Score (HICE-S). By detecting local visual regions and textual phrases, HICE-S builds an interpretable hierarchical scoring mechanism, breaking through the barriers of the single-scale structure of existing reference-free metrics. Comprehensive experiments indicate that our proposed metric achieves state-of-the-art performance on several benchmarks, outperforming existing reference-free metrics like CLIP-S and PAC-S, and reference-based metrics like METEOR and CIDEr. Moreover, several case studies reveal that the assessment process of HICE-S on detailed captions closely resembles interpretable human judgments. Our code is available at https://github.com/joeyz0z/HICE.


Zhibin Duan, Tiansheng Wen, Muyao Wang, Bo Chen, Mingyuan Zhou

Preprint 2024

abstract | paper

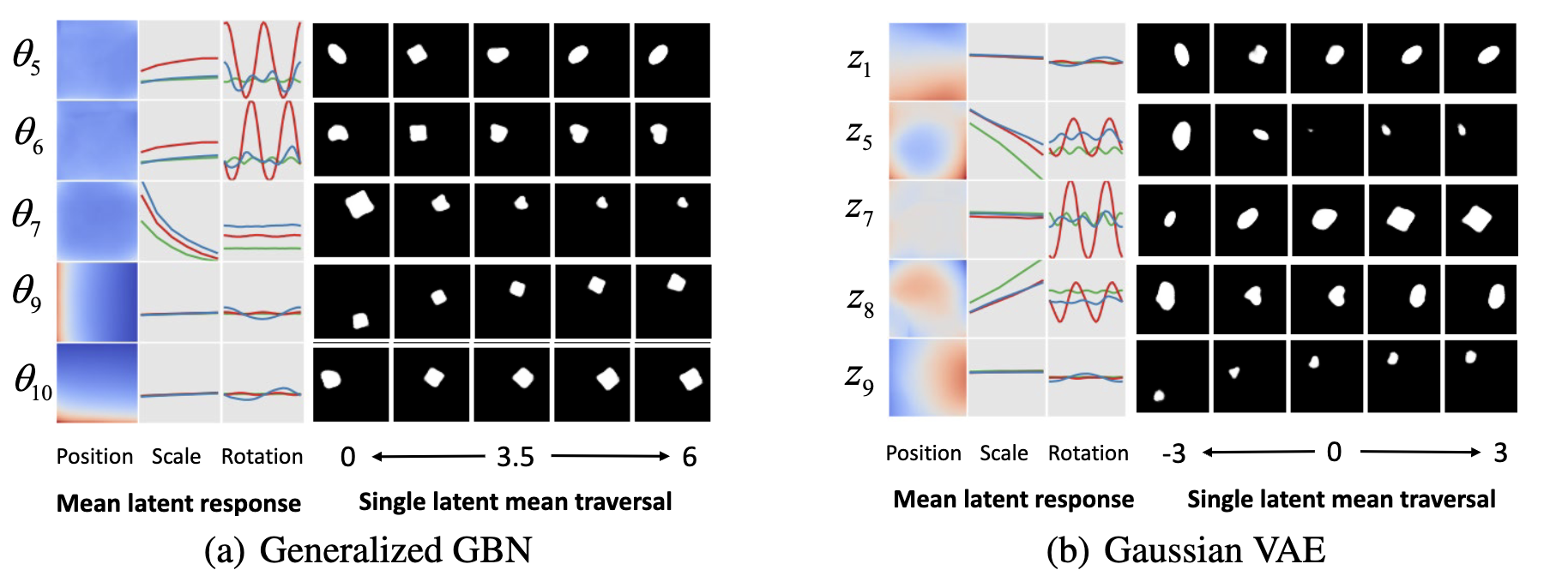

The gamma belief network (GBN), often regarded as a deep topic model, has demonstrated its potential for uncovering multi-layer interpretable latent representations in text data. Its notable capability to acquire interpretable latent factors is partially attributed to sparse and non-negative gamma-distributed latent variables. However, the existing GBN and its variations are constrained by the linear generative model, thereby limiting their expressiveness and applicability. To address this limitation, we introduce the generalized gamma belief network (Generalized GBN) in this paper, which extends the original linear generative model to a more expressive non-linear generative model. Since the parameters of the Generalized GBN no longer possess an analytic conditional posterior, we further propose an upward-downward Weibull inference network to approximate the posterior distribution of the latent variables. The parameters of both the generative model and the inference network are jointly trained within the variational inference framework. Finally, we conduct comprehensive experiments on both expressivity and disentangled representation learning tasks to evaluate the performance of the Generalized GBN against state-of-the-art Gaussian variational autoencoders serving as baselines. |

Professional Activity

Template from this awesome website.